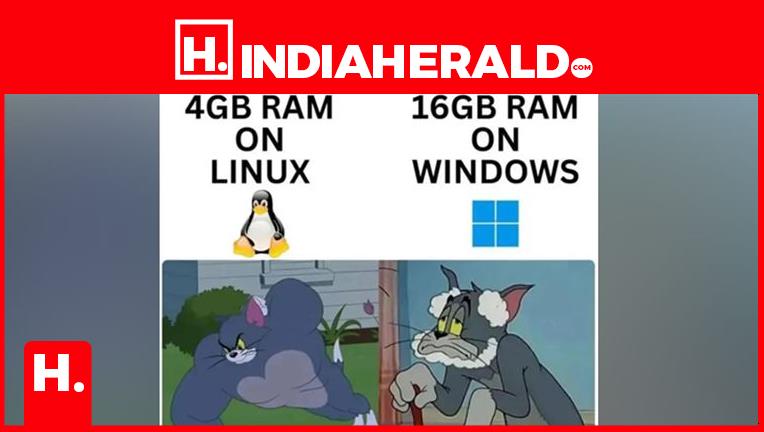

Bohodev.com – In a computing landscape dominated by ever-increasing hardware specifications, a persistent and counterintuitive phenomenon continues to puzzle users: a modest laptop with a mere 4 GB of RAM running a Linux distribution can feel snappy and responsive, while a newer machine with 16 GB of RAM under Windows 10 or 11 can still struggle with lag, fan noise, and unexplained memory consumption. This is not merely anecdote or the province of open-source evangelists; it follows from fundamentally divergent operating system philosophies. The disparity points to a core truth about modern computing: raw hardware specs are often secondary to how efficiently an operating system manages those resources from the moment of boot.

The divergence begins at the most fundamental level: system initialization. By the time a modern Windows desktop finishes loading, it has already started a small army of background services. These include, but are not limited to, real-time search indexing, multiple update orchestrators, extensive telemetry and diagnostic pipelines, and compatibility layers for legacy software. Individually, each service is designed to be lightweight, but collectively they can occupy a substantial share of RAM, sometimes several gigabytes, before the user launches a single application. This “ready-for-anything” approach assumes the user will benefit from these features, effectively committing otherwise idle RAM to the system’s own speculative tasks.

In stark contrast, the boot philosophy of most mainstream Linux desktop environments leans toward minimalism. The core system initializes hardware, loads essential drivers, starts a comparatively small set of services, and presents the desktop. Background file indexing is lighter where it exists at all (GNOME and KDE do ship indexers, but they are easy to disable, and lighter desktops ship none). Telemetry is absent or opt-in in most distributions. The system makes few assumptions about what the user might need later and avoids pre-emptively consuming resources for unrequested convenience features. Consequently, most RAM on an idle Linux system remains available: the kernel does use otherwise unused memory as a cache for recently read files, but it hands that memory back as soon as the applications the user consciously decides to run ask for it.
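To see how much memory is actually on hand on a given Linux machine, one option is to read `/proc/meminfo`. There, `MemFree` counts memory that is not in use at all, while `MemAvailable` is the kernel's own estimate of how much could go to new applications without swapping, which includes cache it can reclaim. The sketch below parses that format; the function names and the sample figures are illustrative assumptions, not measurements from any real system.

```python
def parse_meminfo(text):
    """Parse /proc/meminfo-style text into a dict of integer values (kB for most fields)."""
    fields = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        if not sep:
            continue  # skip lines that are not "Key: value" pairs
        parts = rest.split()
        if parts and parts[0].isdigit():
            fields[key.strip()] = int(parts[0])
    return fields


def summarize(fields):
    """Report total, untouched, and reclaimable-inclusive memory in MiB."""
    total = fields["MemTotal"]
    free = fields["MemFree"]
    # Fall back to MemFree if the kernel does not report MemAvailable.
    available = fields.get("MemAvailable", free)
    return {
        "total_mib": total // 1024,
        "free_mib": free // 1024,
        "available_mib": available // 1024,
        "reclaimable_mib": (available - free) // 1024,
    }


# Made-up sample in the /proc/meminfo format (hypothetical 4 GB-class machine).
SAMPLE = """MemTotal:        4096000 kB
MemFree:          512000 kB
MemAvailable:    2560000 kB
"""

print(summarize(parse_meminfo(SAMPLE)))
```

On a real Linux system, passing `open("/proc/meminfo").read()` instead of `SAMPLE` gives live figures. A low `free_mib` next to a high `available_mib` usually just means the kernel is caching files, not that memory is running out.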

This foundational difference translates directly into perceived performance, particularly under memory pressure. Speed is less about the total RAM installed and more about how soon the system must begin the costly process of swapping data to disk or aggressively reclaiming memory. On the same hardware, Windows tends to reach this pressure point sooner because a larger portion of RAM is already spoken for. Linux, with its smaller baseline footprint, reaches it later. On resource-constrained hardware, this means applications tend to launch faster and run more smoothly on Linux, because the system is not repeatedly stalling them to resolve memory contention caused by its own background operations.
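The arithmetic behind this can be sketched in a few lines. The figures below are hypothetical, chosen only to illustrate the reasoning; they are not measurements of any particular Windows or Linux installation, and the model ignores caching, compression, and shared memory.

```python
def headroom_mib(total_mib, baseline_mib):
    """Memory left for user applications after the OS baseline, in MiB."""
    return max(total_mib - baseline_mib, 0)


def fits_without_swap(total_mib, baseline_mib, workload_mib):
    """True if the workload fits in the remaining headroom (simplified model)."""
    return workload_mib <= headroom_mib(total_mib, baseline_mib)


TOTAL = 4096      # hypothetical 4 GiB machine
WORKLOAD = 2500   # hypothetical working set of the user's applications

# Two assumed idle baselines on the same hardware.
for label, baseline in [("lean baseline", 1000), ("heavy baseline", 3000)]:
    print(label, headroom_mib(TOTAL, baseline),
          fits_without_swap(TOTAL, baseline, WORKLOAD))
```

With these assumed numbers, the same workload fits comfortably under the lean baseline and spills into swap under the heavy one, even though the installed RAM is identical.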

Ultimately, this paradox highlights a clash of design ethos: “user-first” versus “everyone-first.” Windows is engineered as a single platform that tries to serve every conceivable user at once, from enterprise administrators and gamers to touch-device users and operators of legacy business software. Linux distributions, particularly desktop-focused ones, often prioritize user control and transparency, scaling down gracefully by doing less by default. The result is that on aging or modest hardware, the perceived speed advantage of Linux stems not from superior engineering in a vacuum, but from a disciplined, less presumptuous approach to resource management that keeps the foreground user experience paramount.